Three Common Beliefs about Serverless You Can Ignore

Serverless is the rising darling of the cloud world. At least one in three (33%) organizations have deployed serverless apps within the last year. (Source: DigitalOcean Q2 2018 Developer Survey) Of respondents to a CNCF 2018 survey, 38% indicated using serverless today, and another 26% plan to use the technology in the next twelve months.

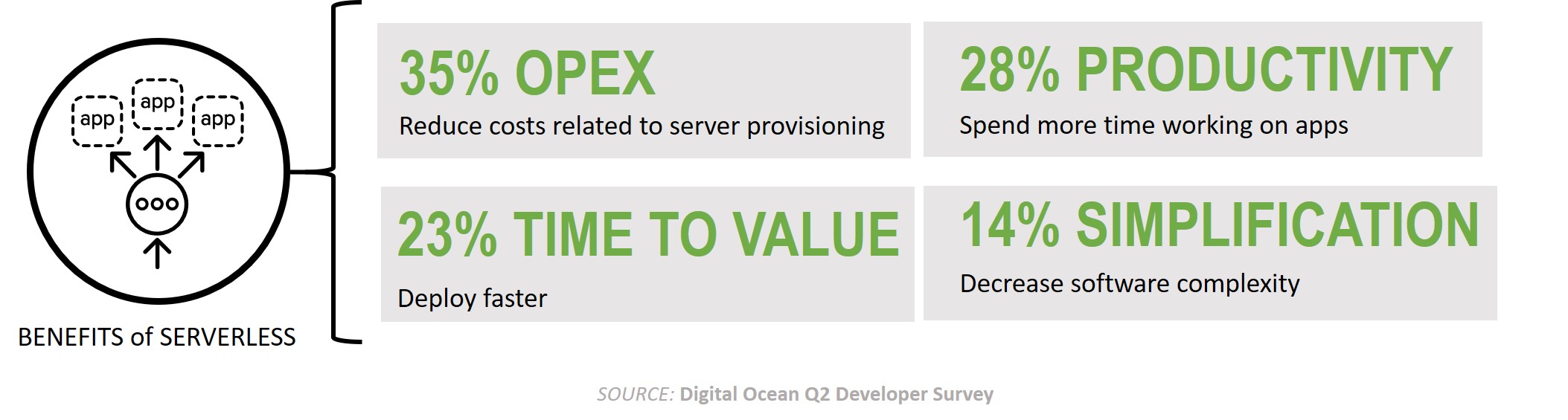

This fledgling cloud option is not only rising fast, it's often misunderstood and credited with almost supernatural powers to reduce costs, speed time to value, and make you breakfast in bed.

And if that wasn't enough to confuse you, there's the conflation of Function as a Service (FaaS) with Serverless. The two are not the same, and treating them as one causes other misunderstandings about how a typical enterprise might take advantage of this fab new technology.

So today we're going to bust three common myths I've heard repeatedly from customers and conference attendees over the past six months. Because understanding what the technology is - and isn't - is necessary before you can figure out if it's worth your time to explore.

Let's start by straightening out the difference between serverless and FaaS.

Myth: Serverless === FaaS

Serverless is a system. A platform. A framework. It is best described as a just-in-time, elastic execution environment. Serverless seeks to eliminate operational overhead and friction by executing something on demand in some kind of isolated environment. That isolated environment is usually a container, but offerings that use virtual machines and WebAssembly also exist. For the sake of brevity I'll use "container" to broadly mean all three.

Serverless is event-driven. This means provisioning and processing are initiated by some kind of trigger, like the arrival of an API request or the clock hitting 2:07pm. It could be an automatically generated event or an interactive one like pushing a button on a form in a web app. In a serverless model, the event initiates the execution of something that exists in a "container".

NOTE for NETOPS: F5 iRule-savvy readers can relate Serverless events to iRule events, e.g. "HTTP_REQUEST" and "HTTP_RESPONSE". The model is much the same - when an EVENT happens, execute some code. Sorry, most Serverless frameworks don't support Tcl, but they often support Node.js and Python.
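To make the "when an EVENT happens, execute some code" model concrete, here is a minimal sketch in plain Python. It is not any real serverless framework's API: the `on` decorator, the `fire` function, and the event names are all made up for illustration.

```python
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], Any]

# Hypothetical registry: event name -> handlers to run when that event fires.
_handlers: Dict[str, List[Handler]] = {}


def on(event_name: str) -> Callable[[Handler], Handler]:
    """Register a function to run whenever `event_name` fires."""
    def register(func: Handler) -> Handler:
        _handlers.setdefault(event_name, []).append(func)
        return func
    return register


def fire(event_name: str, payload: Dict[str, Any]) -> List[Any]:
    """Run every handler registered for the event and collect the results."""
    return [handler(payload) for handler in _handlers.get(event_name, [])]


@on("HTTP_REQUEST")  # e.g. the arrival of an API request
def handle_request(payload: Dict[str, Any]) -> str:
    return f"hello {payload['path']}"


@on("TIMER")  # e.g. the clock hitting 2:07pm
def handle_timer(payload: Dict[str, Any]) -> str:
    return f"report generated at {payload['time']}"


print(fire("HTTP_REQUEST", {"path": "/orders"}))  # ['hello /orders']
print(fire("TIMER", {"time": "14:07"}))           # ['report generated at 14:07']
```

Nothing runs until an event arrives, and an event with no registered handler simply does nothing. A real platform adds the provisioning step on top of this dispatch.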

The "container" is often loaded from a repository at the time it is requested (cold start) or may be already waiting (warm start). Whatever exists in that "container" executes and returns a response to whatever system triggered it to run.
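The difference between a cold and a warm start can be sketched with a toy model. Everything here is hypothetical (the `ToyPlatform` class, its `repository` dictionary standing in for an image registry, and the `evict` method); real platforms decide for themselves when to keep a "container" around.

```python
from typing import Callable, Dict, Tuple

Workload = Callable[[str], str]


class ToyPlatform:
    """Toy model of cold vs. warm starts; not any real platform's API."""

    def __init__(self, repository: Dict[str, Workload]) -> None:
        self.repository = repository         # stands in for an image repository
        self.warm: Dict[str, Workload] = {}  # "containers" already loaded

    def invoke(self, name: str, request: str) -> Tuple[str, str]:
        """Execute the named workload and report which kind of start it was."""
        if name in self.warm:
            start = "warm"  # already waiting, nothing to load
        else:
            # Cold start: fetch from the repository before executing.
            self.warm[name] = self.repository[name]
            start = "cold"
        return start, self.warm[name](request)

    def evict(self, name: str) -> None:
        """Drop an idle "container"; the next invocation is cold again."""
        self.warm.pop(name, None)


platform = ToyPlatform({"resize": lambda request: f"resized {request}"})
print(platform.invoke("resize", "cat.png"))  # ('cold', 'resized cat.png')
print(platform.invoke("resize", "dog.png"))  # ('warm', 'resized dog.png')
```

In this sketch the only cost of a cold start is a dictionary lookup. In practice it is the time to pull and start the "container", which is why the distinction matters for latency-sensitive triggers.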

The business model behind serverless is usually based on paying only for the resources consumed while the "container" is executing. The operational model is to remove everything about operating the environment from the picture and let folks worry about building "something".

That's Serverless. Function as a Service is a specific use of serverless that defines "something" as "a function."

FaaS allows us to take the decomposition of applications that began with microservices to its ultimate conclusion - functions.

Knowing that there is a difference between Serverless and Function as a Service is important to debunking our next myth.

Myth: Refactoring required

Because of the conflation of FaaS and Serverless, many in the market are under the mistaken impression that in order to take advantage of Serverless you will have to refactor your application into its constituent functions.

Serverless does not require you to refactor your application, or design new apps at a functional level. Serverless can just as easily execute a "container" with any kind of application, process, daemon, or function. As long as it's packaged into a "container" and can be invoked, it can execute in a Serverless context.
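As a rough illustration of "as long as it can be invoked", the sketch below runs an existing program once per event without changing it. The `invoke` helper and the `LEGACY_APP` command are hypothetical stand-ins. A real platform would start a "container" image rather than a local subprocess, but the shape of the interaction is similar: payload in, response out.

```python
import subprocess
import sys
from typing import List


def invoke(command: List[str], payload: str) -> str:
    """Run an existing program once, feeding the event payload on stdin."""
    completed = subprocess.run(
        command,
        input=payload,
        capture_output=True,
        text=True,
        check=True,  # raise if the program exits with an error
    )
    return completed.stdout


# Stand-in for an unmodified existing application: it reads stdin and
# writes an upper-cased copy to stdout. Any executable would do here.
LEGACY_APP = [
    sys.executable,
    "-c",
    "import sys; sys.stdout.write(sys.stdin.read().upper())",
]

print(invoke(LEGACY_APP, "order 42"))  # ORDER 42
```

The application itself knows nothing about events or functions. Only the thin wrapper around it does.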

I've also seen successful implementations that take advantage of Serverless to extend (modernize) existing application architectures. Batch-style and out-of-band processing of registrations, order fulfillment, and other non-critical-path processes may be implemented in a Serverless model without completely refactoring traditional applications. Applications which already take advantage of external, API-based integration for such purposes will find Serverless an easier fit. Established client-server based applications will almost certainly require some modification to take advantage of Serverless, but it is not the extensive undertaking a complete refactor would require.

Processes that execute only occasionally - based on specific events that happen sporadically or unpredictably - can also be a good fit for Serverless. It's far more cost-efficient to fire up a "container" only when there is something to execute than it is to keep that "container" running all the time. That's true for both public and on-premises Serverless.
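A back-of-the-envelope comparison shows why. The numbers below are invented for illustration (a rate of $0.10 per hour, a 720-hour month, 1,000 invocations of 30 seconds each), and the sketch assumes the same hourly rate in both models, which real pricing does not guarantee.

```python
def always_on_cost(hourly_rate: float, hours: float) -> float:
    """Cost of keeping a "container" running for the whole period."""
    return hourly_rate * hours


def on_demand_cost(
    hourly_rate: float, invocations: int, seconds_per_invocation: float
) -> float:
    """Cost of paying only for the time the "container" is executing."""
    busy_hours = invocations * seconds_per_invocation / 3600
    return hourly_rate * busy_hours


# Assumed figures, not taken from any provider's price list.
RATE = 0.10            # dollars per hour
HOURS_IN_MONTH = 720   # 30 days
INVOCATIONS = 1000
SECONDS_EACH = 30

print(f"always on: ${always_on_cost(RATE, HOURS_IN_MONTH):.2f}")
print(f"on demand: ${on_demand_cost(RATE, INVOCATIONS, SECONDS_EACH):.2f}")
```

With these assumptions the workload is busy for a little over eight hours a month, so paying only for execution time costs a small fraction of keeping it running. The gap narrows as utilization climbs.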

Which brings us to the third of our myths, which focuses on location.

Myth: Serverless Doesn't Live On-premises

I've heard IT folk from far and wide, as well as pundits, make this claim. It is as patently false as the notion that you couldn't run "cloud" on-premises. You most certainly can, and according to the Developer Economics State of the Developer Nation report, a healthy percentage of organizations are doing just that.

Larger, more heavily used applications can also benefit from very efficient scaling, only needing to pay for extra resources for the specific parts of the application under heavy load, and also more easily able to identify and optimise those. This advantage for larger applications is probably a major reason we see 17% of current serverless computing adopters running a solution in their own data centre.

Cost efficiency gains in an on-premises serverless implementation go beyond scalability. The ability to reuse the same resources and share them across applications for which there is sporadic use is not insignificant. Serverless also adds the ability to bolt on value-add capabilities to applications and operational functions by taking advantage of its core, event-driven nature. Its use on-premises should not be dismissed lightly without understanding that serverless benefits go well beyond eliminating operational friction from developers' daily lives. That's certainly a boon, but it isn't the only benefit and certainly isn't the only reason organizations are experimenting with Serverless on-premises.

So the reality is that Serverless can be and is being implemented on-premises. The aforementioned CNCF Survey digs into the most popular "installable" Serverless platforms:

- Kubeless (42% up from 2%)

- Apache OpenWhisk (25% up from 12%)

- OpenFaaS (20% up from 10%)

There are more, and there are sure to be others as this technology picks up speed.

Both Serverless and Function as a Service are enjoying incredible adoption rates as organizations tinker with, toy with, and evaluate the technology for use in both new, cloud-native applications and in modernization efforts. Recognizing and ignoring common misconceptions is an important first step to deciding if the technology is a good fit for applications in your organization.