Container Security Basics: Orchestration

If you're just jumping into this series, you may want to start at the beginning:

Container Security Basics: Introduction

Container Security Basics: Pipeline

According to Tripwire's 2019 State of Container Security report, roughly one in three organizations (32%) are operating more than 100 containers in production. A smaller share (13%) are operating more than 500, and 6%, the truly eager adopters, are managing more than 1,000. Few organizations operate containers at scale without an orchestration system to assist them.

The orchestration layer of container security focuses on the environment responsible for the day-to-day operation of containers. Based on the data available today, if you’re using containers, you’re almost certainly using Kubernetes as the orchestrator.

It’s important to note that other orchestrators exist, but most of them are also leveraging components and concepts derived from Kubernetes. Therefore, we’ll focus on the security of Kubernetes and its components.

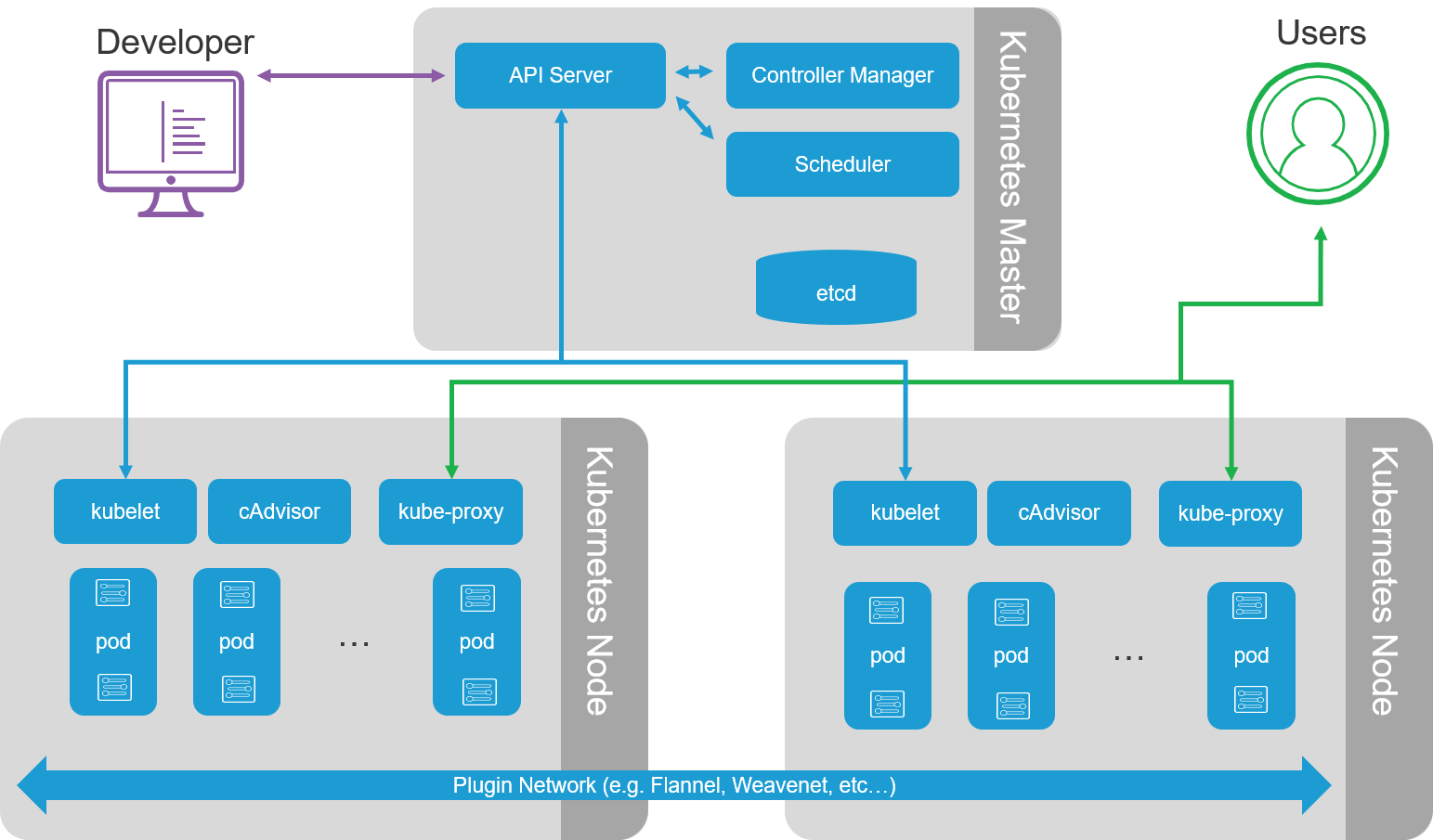

The Kubernetes Environment

There are multiple moving parts that make up Kubernetes. That makes it more challenging to secure, not only because of the number of components involved, but also because of the way those components interact. Some communicate via API. Others communicate via the host file system. All are potential points of entry into the orchestration environment that must be addressed.

Basic Kubernetes environment

A quick overview of core components requiring attention:

- API Server and Kubelet

- Pods

- Etcd

So grab a cup of coffee: this one’s going to take a bit more time to get through.

1. Authentication is not optional

Astute readers will note this sounds familiar. You may have heard it referred to as Security Rule Two, a.k.a. Lock the Door. It’s a theme we’ll keep repeating because it’s so often ignored. Strong authentication is a must. We’ve watched the number of security incidents caused by poor container security practices continue to rise, and one of the most common causes is a failure to require authentication, usually on deployments in the public cloud.

Require strong credentials and rotate them often. Access to the API server (for example, through an unsecured console) can lead to a “game over” situation because the entire orchestration environment can be controlled through it. An attacker with that access can deploy pods, change configurations, and stop or start containers. In a Kubernetes environment, the API server is the “one API to rule them all” that you want to keep out of the hands of bad actors.

How to secure the API server and Kubelet

It’s important to note that these recommendations are based on the current Kubernetes authorization model. Always consult the latest documentation for the version you are using.

- Enable mTLS everywhere

  - Dedicate a CA per service: k8s, etcd, applications

  - Rotate certificates and check the permissions on private keys stored on disk

- Only bind to secure addresses

  - Beware of the API server settings “insecure-bind-address” and “insecure-port”

  - Apply this to _all_ services (SSH, Vault, etcd)

- Disable “Anonymous Auth”

  - Does “Anonymous auth” sound like a good idea?

  - It was disabled by default only in 1.5.x; since 1.6 the API server enables it by default (when an authorization mode other than AlwaysAllow is used) and the kubelet also defaults to enabled, so set “--anonymous-auth=false” explicitly

- Enable Authorization

  - Don’t use “--authorization-mode=AlwaysAllow”

  - API Server should have “--authorization-mode=RBAC,Node”

  - Kubelet should have “--authorization-mode=Webhook”

  - Restrict the Kubelet to resources on its own node (the “Node” authorization mode, paired with the NodeRestriction admission plugin, provides this)


- Kubernetes Authorization Reference

- Kubelet Authentication and Authorization Reference
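As a rough illustration, the flag recommendations above can be turned into a small audit helper. This is a minimal sketch, assuming you can obtain each component's command-line arguments as a list of “--name=value” strings (for example, from a static pod manifest). `parse_flags` and `audit_flags` are hypothetical helpers, not part of any Kubernetes tool; they cover only the flags listed here and do not read settings supplied through a kubelet configuration file.

```python
# Hypothetical audit helpers for kube-apiserver / kubelet flags (a sketch, not a real tool).


def parse_flags(args: list[str]) -> dict[str, str]:
    """Turn ["--name=value", "--bool-flag"] into {"name": "value", "bool-flag": "true"}."""
    flags: dict[str, str] = {}
    for arg in args:
        if not arg.startswith("--"):
            continue  # ignore positional arguments
        name, sep, value = arg[2:].partition("=")
        flags[name] = value if sep else "true"
    return flags


def audit_flags(args: list[str], component: str) -> list[str]:
    """Return findings for 'kube-apiserver' or 'kubelet'; an empty list means none."""
    flags = parse_flags(args)
    findings: list[str] = []

    # Treat a missing flag as enabled: both components default to true on modern releases.
    if flags.get("anonymous-auth", "true").lower() != "false":
        findings.append("anonymous-auth is not explicitly set to false")

    modes = [m.strip() for m in flags.get("authorization-mode", "").split(",") if m.strip()]
    if "AlwaysAllow" in modes:
        findings.append("authorization-mode includes AlwaysAllow")

    if component == "kube-apiserver":
        for required in ("RBAC", "Node"):
            if required not in modes:
                findings.append(f"authorization-mode is missing {required}")
        # Only an explicit non-zero value is flagged; older releases defaulted to 8080,
        # so a missing flag is not proof that the insecure port is off.
        if flags.get("insecure-port", "0") != "0":
            findings.append("insecure-port is enabled")
        if "insecure-bind-address" in flags:
            findings.append("insecure-bind-address is set")
    elif component == "kubelet":
        if "Webhook" not in modes:
            findings.append("authorization-mode is not Webhook")

    return findings


if __name__ == "__main__":
    apiserver_args = ["--anonymous-auth=false", "--authorization-mode=RBAC,Node", "--insecure-port=0"]
    print(audit_flags(apiserver_args, "kube-apiserver"))  # []
    print(audit_flags(["--authorization-mode=AlwaysAllow"], "kubelet"))  # three findings
```

Dedicated benchmarking tools perform far more thorough checks; the point here is only that each bullet above maps to a concrete, testable setting.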

2. Pods and Privileges

A pod is a group of one or more containers and the smallest deployable unit in Kubernetes. Depending on the Container Network Interface (CNI) plugin in use, all pods may be able to reach each other by default. Some CNI plugins support “Network Policies” that can restrict this default behavior. This matters because pods can be scheduled on different Kubernetes nodes (which are analogous to physical servers), so a compromised pod may be able to reach workloads on other nodes, not just its own. Pods also commonly mount secrets, which can include private keys, authentication tokens, and other sensitive information. Hence the name ‘secrets’.
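Where the CNI plugin enforces Network Policies, a common starting point is a default-deny policy per namespace, with explicit allow rules layered on top. The sketch below builds such a NetworkPolicy as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The namespace `demo-app` and the helper `default_deny_ingress` are hypothetical, and the policy has no effect on a CNI plugin that does not enforce Network Policies.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Build a NetworkPolicy that blocks all ingress to every pod in `namespace`.

    An empty podSelector matches every pod in the namespace. Listing "Ingress"
    in policyTypes without any ingress rules means no inbound traffic is allowed.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},
            "policyTypes": ["Ingress"],
        },
    }


if __name__ == "__main__":
    # Example: python netpol.py | kubectl apply -f -
    print(json.dumps(default_deny_ingress("demo-app"), indent=2))
```

Traffic that a workload genuinely needs is then opened with additional, narrowly scoped policies rather than by removing the default deny.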

All of this raises several security concerns with pods. At F5, we always assume a compromised pod when we begin threat modeling. That’s because pods are where application workloads are deployed, and because application workloads are the most likely to be exposed to untrusted access, they are the most likely point of compromise. That assumption leads to four basic questions to ask when planning how to mitigate potential threats.

- What could an attacker see or do with other pods given access to a pod?

- Can an attacker access other services in the cluster?

- What secrets are automounted?

- What permissions have been granted to the pod service account?

The answers to these questions expose risks that could be exploited if an attacker gains access to a pod within the cluster. Mitigating the threats of pod compromise requires a multi-faceted approach involving configuration options, privilege control, and system-level restrictions. Remember that multiple pods can be deployed on the same (physical or virtual) node and therefore share that node’s operating system kernel, usually Linux.
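The third and fourth questions can be partly answered from the pod spec itself. This minimal sketch assumes the Pod and, optionally, its ServiceAccount are available as dicts, for instance parsed from `kubectl get pod <name> -o json`; `pod_exposure` is a hypothetical helper. It applies the documented precedence for `automountServiceAccountToken` (the pod's setting wins over the ServiceAccount's, and the token is mounted when neither sets it to false) and lists secrets mounted as volumes or referenced through `secretKeyRef`. It does not inspect `envFrom` or projected volumes.

```python
from typing import Optional


def pod_exposure(pod: dict, service_account: Optional[dict] = None) -> dict:
    """Summarize which credentials a pod spec exposes to its containers."""
    spec = pod.get("spec", {})
    sa_name = spec.get("serviceAccountName", "default")

    # Pod-level setting wins; otherwise use the ServiceAccount's; default is to mount.
    automount = spec.get("automountServiceAccountToken")
    if automount is None and service_account is not None:
        automount = service_account.get("automountServiceAccountToken")
    if automount is None:
        automount = True

    # Secrets mounted as volumes.
    secret_volumes = [
        vol["secret"]["secretName"]
        for vol in spec.get("volumes", [])
        if "secret" in vol and "secretName" in vol["secret"]
    ]
    # Secrets injected as individual environment variables.
    env_secret_refs = sorted({
        env["valueFrom"]["secretKeyRef"]["name"]
        for container in spec.get("containers", [])
        for env in container.get("env", [])
        if "secretKeyRef" in env.get("valueFrom", {})
    })
    return {
        "serviceAccount": sa_name,
        "tokenAutomounted": automount,
        "secretVolumes": secret_volumes,
        "secretEnvRefs": env_secret_refs,
    }
```

What the service account is actually allowed to do lives in RBAC rather than in the pod spec; one way to check it is `kubectl auth can-i --list --as=system:serviceaccount:<namespace>:<name>`.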

How to mitigate Pod threats

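Kubernetes includes a Pod Security Policy resource that controls security-sensitive aspects of a pod. It enables operators to define the conditions a pod must meet to be admitted into the system and enforces a baseline for the pod Security Context. (PodSecurityPolicy was deprecated in Kubernetes 1.21 and removed in 1.25; on newer clusters, Pod Security Admission enforces comparable baselines, and the same fields are set in each pod’s securityContext.) Never assume defaults are secure. Implement secure baselines and verify expectations to guard against pod threats.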
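Whichever admission mechanism enforces it, a secure baseline ultimately shows up in each pod's securityContext. The following sketch builds a Pod manifest carrying the settings discussed in this section. The pod name, image, and UID/GID 10001 are placeholders, `hardened_pod` is a hypothetical helper, and the `seccompProfile` field assumes Kubernetes 1.19 or later (older releases used annotations instead).

```python
import json


def hardened_pod(name: str, image: str) -> dict:
    """Build a Pod manifest with a restrictive securityContext baseline."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Pod-level defaults, inherited by every container.
            "securityContext": {
                "runAsNonRoot": True,   # kubelet refuses to start a container running as UID 0
                "runAsUser": 10001,     # placeholder non-root UID
                "runAsGroup": 10001,    # placeholder GID
                "seccompProfile": {"type": "RuntimeDefault"},  # runtime's default syscall filter
            },
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Container-only fields.
                    "securityContext": {
                        "privileged": False,
                        "allowPrivilegeEscalation": False,
                        "readOnlyRootFilesystem": True,
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hardened_pod("web", "registry.example.com/web:1.0"), indent=2))
```

The image has to cooperate: it must run correctly as a non-root user and write only to mounted volumes (such as an emptyDir) once the root filesystem is read-only.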

Specific options for a Pod Security Policy should address the following:

- Avoid privileged containers

  - Look for the “--allow-privileged” setting on the kube-apiserver and/or kubelet

  - Look for “privileged: true” in the pod specification

  - Reduce security risk with “--allow-privileged=false”

- The default container user with Docker is root

  - Avoid running any workload as root

  - Pod Security Policy can use “runAsUser”, “runAsGroup” and “runAsNonRoot: true”

  - Similar options can be defined in a Dockerfile (the USER instruction)

- Disallow privilege escalation within a container

  - “allowPrivilegeEscalation: false”

- Use SELinux / AppArmor

  - SELinux uses Multi-Category Security (MCS) to isolate containers from each other

  - Leave it enabled and work through the denials

  - Disabling it or switching to permissive mode severely reduces security

- Prevent symlink / entrypoint attacks

  - Attackers can overwrite the entrypoint binary or create malicious symlinks

  - Avoid this by setting “readOnlyRootFilesystem: true”

- Avoid host* configuration options

  - Attackers with access to host resources are more likely to extend their compromise to the host and/or adjacent containers

  - Avoid options like hostPID, hostIPC, hostNetwork, and hostPorts

- Use readOnly hostPath mounts

  - If read/write access to the host is necessary, be careful with the deployment, and certainly don’t make it a default practice

  - Use “readOnly” on all allowedHostPaths to avoid attacks

- Seccomp profiles

  - Reduce the attack surface by limiting the allowed system calls

  - Docker’s default profile blocks some system calls, but it is a generic profile

  - Application-specific profiles provide the greatest security benefit
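The checklist above can also be applied after the fact by auditing existing pod specs. This is a minimal sketch, assuming the Pod `spec` is available as a dict; `audit_pod_spec` is a hypothetical helper. It treats container-level securityContext fields as overriding pod-level ones and checks only what is visible in the spec: an image's own USER instruction and SELinux/AppArmor enforcement on the node are outside its view. A missing seccomp profile is treated as Unconfined, which is the default unless the kubelet is configured otherwise.

```python
def _effective(container_ctx: dict, pod_ctx: dict, key: str):
    """Container-level securityContext fields override pod-level ones."""
    if key in container_ctx:
        return container_ctx[key]
    return pod_ctx.get(key)


def audit_pod_spec(spec: dict) -> list[str]:
    """Return findings for a Pod `spec` dict; an empty list means none were found."""
    findings: list[str] = []
    pod_ctx = spec.get("securityContext", {})

    # Host namespaces give a compromised container a view into the node.
    for field in ("hostPID", "hostIPC", "hostNetwork"):
        if spec.get(field, False):
            findings.append(f"pod: {field} is enabled")

    host_path_volumes = {v["name"] for v in spec.get("volumes", []) if "hostPath" in v}

    for c in spec.get("containers", []) + spec.get("initContainers", []):
        name = c.get("name", "<unnamed>")
        ctx = c.get("securityContext", {})

        if ctx.get("privileged", False):
            findings.append(f"{name}: privileged is true")
        if ctx.get("allowPrivilegeEscalation") is not False:
            findings.append(f"{name}: allowPrivilegeEscalation is not false")
        if ctx.get("readOnlyRootFilesystem") is not True:
            findings.append(f"{name}: readOnlyRootFilesystem is not true")

        # Root if UID 0 is explicit, or if nothing in the spec rules root out.
        run_as_user = _effective(ctx, pod_ctx, "runAsUser")
        non_root = _effective(ctx, pod_ctx, "runAsNonRoot")
        if run_as_user == 0 or (non_root is not True and run_as_user is None):
            findings.append(f"{name}: may run as root")

        profile = _effective(ctx, pod_ctx, "seccompProfile") or {}
        if profile.get("type") not in ("RuntimeDefault", "Localhost"):
            findings.append(f"{name}: seccomp profile is missing or Unconfined")

        for port in c.get("ports", []):
            if "hostPort" in port:
                findings.append(f"{name}: uses hostPort {port['hostPort']}")

        # readOnly is set on the volumeMount, not on the hostPath volume itself.
        for mount in c.get("volumeMounts", []):
            if mount.get("name") in host_path_volumes and not mount.get("readOnly", False):
                findings.append(f"{name}: hostPath volume {mount['name']} is writable")

    return findings
```

Running a check like this in CI against rendered manifests catches regressions before they reach the admission layer, where they would otherwise be rejected (or, without enforcement, silently admitted).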

3. Etcd

Etcd is the key-value store for cluster configuration and secrets. A compromise here can enable extraction of sensitive data or injection of malicious data. Neither is good. Mitigating threats to etcd means controlling access, which is best accomplished by enforcing mTLS. By default, Kubernetes stores Secrets in etcd unencrypted; the base64 encoding seen in Secret manifests is an encoding, not encryption. For stronger protection of sensitive secrets, consider enabling encryption at rest with an “encryption provider”, or consider using HashiCorp Vault.
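Two points from this paragraph are easy to demonstrate. First, base64 is reversible by anyone who can read the value. Second, encryption at rest is configured with an EncryptionConfiguration file passed to the API server through “--encryption-provider-config”. The sketch below generates such a configuration for Secrets using the secretbox provider with a random 32-byte key. `encryption_config` and the key name `key1` are illustrative, and production setups may prefer a KMS provider so the key does not sit on the control-plane host. JSON is valid YAML, so the printed output can serve as the configuration file.

```python
import base64
import json
import os

# Base64 is a reversible encoding: anyone who can read the value can decode it.
encoded = base64.b64encode(b"s3cr3t-password").decode()
print(encoded)
print(base64.b64decode(encoded))  # b's3cr3t-password'


def encryption_config(key_name: str = "key1") -> dict:
    """Build an EncryptionConfiguration that encrypts Secrets with secretbox.

    secretbox expects a base64-encoded 32-byte key. The first provider is used
    for new writes; the trailing identity provider lets the API server still
    read Secrets that were written before encryption was enabled.
    """
    key = base64.b64encode(os.urandom(32)).decode()
    return {
        "apiVersion": "apiserver.config.k8s.io/v1",
        "kind": "EncryptionConfiguration",
        "resources": [
            {
                "resources": ["secrets"],
                "providers": [
                    {"secretbox": {"keys": [{"name": key_name, "secret": key}]}},
                    {"identity": {}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(encryption_config(), indent=2))
```

Existing Secrets remain unencrypted until they are rewritten, for example with `kubectl get secrets --all-namespaces -o json | kubectl replace -f -`. The generated file contains the key itself, so it needs the same care as any other private key on disk.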

Read the next blog in the series:

Container Security Basics: Workload

Jordan Zebor and Lori MacVittie