Bot Defense Should be a Key Component of a Proactive Security Strategy

Usually, when we talk about app protection, we immediately focus on web application firewalls. Specifically, we tend to focus on the OWASP Top Ten and the application-layer vulnerabilities that can turn an organization into just another security statistic.

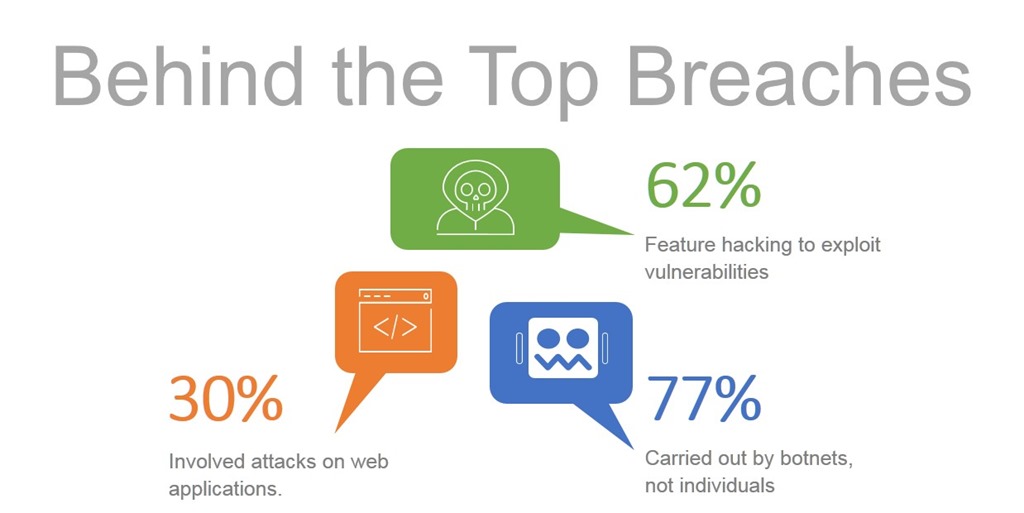

That’s not a bad thing, as data from Verizon and other sources tells us that 62% of the top breaches featured exploitation of vulnerabilities like those described by the OWASP Top Ten. We know from WhiteHat Security research that most apps have at least one critical vulnerability, and that it remains unpatched for much of a year.

So to say most apps have a vulnerability is not hyperbole.

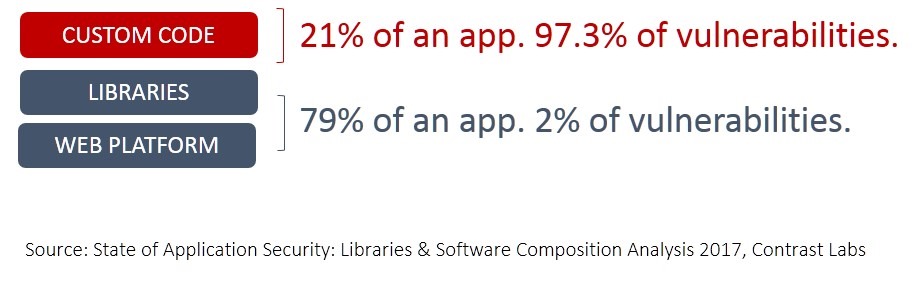

The difference between those vulnerabilities and the ones that tend to get exploited is that the former aren’t publicly disclosed as CVEs. Custom code may contain the bulk of the vulnerabilities in an app, but finding them requires time, patience, and money. It’s much more efficient from an attacker’s perspective to go after framework and platform vulnerabilities that are publicly disclosed as CVEs.

And we know they do. Just recently we saw a highly critical vulnerability in Drupal exploited in conjunction with a previously discovered vulnerability in the same CMS platform. What is frightening is the speed with which these vulnerabilities were exploited. According to an article on the vulnerability at Dark Reading:

“Drupal administrators last Wednesday rushed out an out-of-cycle security release warning about a highly critical vulnerability (CVE-2018-7602) affecting Drupal 7.x and 8.x versions. The new vulnerability — related to an even more severe and somewhat incompletely fixed flaw (CVE-2018-7600) from March — potentially gives threat actors multiple ways to attack a Drupal site, maintainers of the open source CMS platform warned.

But barely hours after the advisory was posted, attackers began actively exploiting the flaw to try, among other things, to upload cryptocurrency miners on vulnerable sites or to use compromised sites to launch distributed denial-of-service attacks. In virtually no time at all — and certainly before a vast majority of site owners had an opportunity to upgrade or apply mitigations — thousands of host systems around the world became potential targets for compromise.”

Given the speed with which attackers were able not only to find but also to exploit vulnerable host systems, one can reasonably conclude that reconnaissance and attacks were carried out in an automated fashion, most likely using bots.

As Dark Reading notes, this particular attack brings to the fore the problem of patching and disclosure. We know that it often takes as long as 60 days before an organization is even aware it is vulnerable, and twice that to address the vulnerability with a patch.

You can’t mitigate what you aren’t aware of, and even if organizations were aware in the first 24 hours, patching processes often take much longer.

So the problem becomes: how do you stop such attacks?

Given that a significant share of discovery and exploitation – particularly of published vulnerabilities (CVEs) – is carried out via bots, a proactive security posture may prevent attackers from even discovering that your systems are vulnerable.

Proactive Security Includes Bot Defense

Bot defense – often included as one of the capabilities of an advanced Web Application Firewall (WAF) – is one way to reduce risk and prevent bots from finding and subsequently exploiting the vulnerabilities we all know exist in just about every application. Employing bot defense also has an “icing on the cake” benefit: it reduces resource consumption by bad actors. Reducing consumption lightens load, which means better performance for legitimate users and systems. Distil Networks, which has been tracking ‘bad bots’ for years, notes in its latest report that “in 2017, bad bots accounted for 21.8 percent of all website traffic, a 9.5 percent increase over the previous year.”

Nearly 22% of website traffic comes from bad bots. That’s roughly one request in five. Put another way, if you needed five servers (instances, if we’re talking cloud) to meet demand, you could reduce that to four by blocking bad bots before they get near your systems.
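The back-of-the-envelope math behind that claim can be sketched in a few lines of Python. This is only an illustration: the function name `servers_needed` is made up here, and it assumes that load scales linearly with request volume, which real workloads rarely do exactly.

```python
import math


def servers_needed(current_servers: int, bad_bot_share: float) -> int:
    """Estimate how many servers remain necessary once bad-bot traffic is
    blocked upstream.

    Assumption (a simplification): every request costs about the same, so
    capacity scales linearly with traffic volume.
    """
    legitimate_share = 1.0 - bad_bot_share
    # Round up, because you cannot run a fraction of a server.
    return math.ceil(current_servers * legitimate_share)


# 21.8% is the bad-bot share Distil Networks reported for 2017.
print(servers_needed(5, 0.218))
```

With five servers and a 21.8% bad-bot share, the legitimate load works out to about 3.91 servers' worth, which rounds up to four.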

Patching is a must. But the reality is that patching timelines are not shrinking as fast as attackers are able to exploit new vulnerabilities. I remain a strong proponent of virtual patching, that is, using the capabilities of a programmable proxy or WAF to virtually ‘patch’ a vulnerability until patches can be applied to the affected systems. But organizations will be hard-pressed to match the speed of attackers when timelines demand less than 24 hours to get either kind of patch in place.
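To make the idea of a virtual patch concrete, here is a minimal sketch of the kind of check a programmable proxy might run in front of an unpatched application. It is not any vendor's rule and not a complete mitigation. It assumes, for illustration, that the exploit relies on request parameter names containing a key that begins with `#`, which is the class of input the Drupal fix for CVE-2018-7600 is generally described as stripping. The function name `has_suspicious_keys` is hypothetical.

```python
from urllib.parse import parse_qsl, urlsplit


def has_suspicious_keys(url: str, body: str = "") -> bool:
    """Return True if any query-string or form-body parameter name contains
    a bracketed key segment starting with '#'.

    Illustrative rule only: a real virtual patch would also need to cover
    cookies, JSON bodies, multipart uploads, and encoding tricks.
    """
    query = urlsplit(url).query
    pairs = parse_qsl(query, keep_blank_values=True)
    pairs += parse_qsl(body, keep_blank_values=True)
    for name, _value in pairs:
        # Split a name such as 'mail[#post_render][]' into its segments:
        # 'mail', '#post_render', ''.
        segments = name.replace("]", "").split("[")
        if any(segment.startswith("#") for segment in segments):
            return True
    return False


# A proxy would reject the request (for example with HTTP 403) when this
# returns True, and forward it to the application otherwise.
blocked = has_suspicious_keys(
    "/user/register?ajax_form=1",
    "mail%5B%23post_render%5D%5B%5D=exec",
)
print(blocked)
```

The point of the sketch is the placement, not the rule itself: the check lives in the proxy, so it can be deployed in minutes without touching the application code that still needs a real patch.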

Employing a bot defense service can mitigate some of that risk because it isn’t concerned with which vulnerability a bot is trying to exploit. A bot defense service is focused purely on whether the client is a bad bot, a good bot, or a user. Bot defense may be the best way to block discovery and exploitation in the hours or days before you can get a patch or other mitigating solution in place.
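The three-way decision described above can be sketched as follows. This is a toy illustration, not how any particular bot defense product works: the signal names, the lists, and the rate threshold are all assumptions chosen for the example, and commercial services rely on far richer signals. What the sketch does show is that the decision never inspects the payload, so it applies no matter which vulnerability the client is probing for.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only.
KNOWN_GOOD_BOTS = ("googlebot", "bingbot")
SCRIPTED_CLIENT_HINTS = ("curl", "python-requests", "scrapy")
MAX_REQUESTS_PER_MINUTE = 120


@dataclass
class ClientProfile:
    user_agent: str
    requests_last_minute: int
    passed_js_challenge: bool  # did the client run a JavaScript challenge?


def classify(client: ClientProfile) -> str:
    """Classify a client as 'good_bot', 'bad_bot', or 'user' based on who it
    appears to be, never on what it is trying to exploit."""
    ua = client.user_agent.lower()
    # Caveat: user agents are trivially spoofed. A real service would verify
    # a claimed crawler by other means before trusting it.
    if any(name in ua for name in KNOWN_GOOD_BOTS):
        return "good_bot"
    if not ua or any(hint in ua for hint in SCRIPTED_CLIENT_HINTS):
        return "bad_bot"
    if client.requests_last_minute > MAX_REQUESTS_PER_MINUTE:
        return "bad_bot"
    if not client.passed_js_challenge:
        return "bad_bot"
    return "user"


print(classify(ClientProfile("python-requests/2.31", 5, False)))
print(classify(ClientProfile("Mozilla/5.0 Firefox/120.0", 12, True)))
```

A proxy using something like this would drop or challenge `bad_bot` traffic before it reaches the application, which is what keeps automated scanners from learning whether a site is vulnerable in the first place.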

That’s one of the building blocks of a proactive security strategy: stopping attempts by bots and other bad actors before they have the opportunity to explore apps, APIs, and data.

Stay safe out there!